Are you ready to ace your next data engineering interview with confidence? As companies increasingly adopt Apache Iceberg for managing huge analytic datasets, understanding its intricacies is becoming crucial for success in the field.

You’ll need to demonstrate your ability to handle complex data tasks, including managing table formats and understanding schema evolution. This guide is designed to help you prepare with detailed Apache Iceberg interview questions and answers, updated for 2025. With RoboApply’s resources, you can practice with mock interviews using our interview coach feature, ensuring you’re well-prepared to tackle real-world problems.

Key Takeaways

- Comprehensive guide to Apache Iceberg interview preparation

- Practical examples of Iceberg tables in production environments

- Detailed explanations to demonstrate technical understanding

- RoboApply’s interview coach for practicing Apache Iceberg concepts

- Importance of understanding Apache Iceberg for data roles

Understanding Apache Iceberg for Your Next Technical Interview

As you prepare for your next technical interview, understanding Apache Iceberg is crucial for success in data engineering roles. Apache Iceberg is an open table format designed specifically for massive analytic datasets, addressing many limitations of traditional data lake approaches.

What is Apache Iceberg and Why It Matters

Apache Iceberg is designed to provide a flexible and scalable solution for managing large datasets. It matters because it addresses common data lake problems such as the lack of atomic commits, inconsistent reads while data is being written, and slow query planning at scale. With Apache Iceberg, you can efficiently manage your data and improve the performance of your data processing systems.

One of the key benefits of Apache Iceberg is its ability to support various data processing systems and integrate with existing data infrastructure. This makes it an attractive solution for companies dealing with large amounts of data.

How to Prepare for Apache Iceberg Questions with RoboApply

To prepare for Apache Iceberg questions in your technical interview, you can use RoboApply’s interview coach to practice explaining complex Apache Iceberg concepts in a clear and concise manner. RoboApply provides customized practice sessions focused on the specific Apache Iceberg topics most relevant to your target role.

By using RoboApply, you can improve your understanding of Apache Iceberg’s table format, schema evolution, and data management capabilities. You’ll be able to confidently discuss the business cases where Apache Iceberg provides significant advantages and how it supports various data processing systems.

Basic Apache Iceberg Interview Questions

As you prepare for your Apache Iceberg interview, it’s essential to understand the foundational concepts that drive this powerful data management system. Apache Iceberg is designed to address the complexities of managing large-scale data lakes, and interviewers typically focus on assessing your knowledge of its core features and capabilities.

What is Apache Iceberg and its Core Features?

Apache Iceberg is an open table format that brings reliability and performance to data lakes. Its core features include schema evolution, which allows you to change the structure of your data without rewriting existing files, and hidden partitioning, which enables efficient data organization without exposing partition details to users. Additionally, Apache Iceberg supports time travel, allowing you to query historical data snapshots.

- Schema evolution for flexible data structure changes

- Hidden partitioning for efficient data organization

- Time travel for querying historical data snapshots

How Does Apache Iceberg Differ from Traditional Data Lake Formats?

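Apache Iceberg differs significantly from traditional data lake formats like Hive tables in terms of metadata management and file organization. A Hive table is defined by directories: the metastore records partition locations, and engines list those directories to find data files. Iceberg instead tracks every data file explicitly in metadata files (a table metadata file, manifest lists, and manifests) stored alongside the data, while the catalog only needs to hold a pointer to the current metadata file. This avoids expensive directory listings, makes commits atomic, and enables more efficient planning for large datasets and complex queries.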

The key differences include:

- Table metadata stored in files alongside the data, with the catalog holding only a pointer to the current version

- Improved file organization for better performance

- Enhanced support for concurrent reads and writes

Explain the Key Components of Apache Iceberg

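The key components of Apache Iceberg are usually described as three layers: the catalog, which points to each table’s current metadata file; the metadata layer, made up of the table metadata file, manifest lists, and manifest files, which records the schema, partition specs, snapshots, and per-file statistics; and the data layer, which holds the data files themselves (commonly Parquet, ORC, or Avro). Understanding these components is crucial for appreciating how Apache Iceberg achieves its performance and reliability.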

“Apache Iceberg’s architecture is designed to provide a robust and scalable solution for managing large-scale data lakes.”

What Problems Does Apache Iceberg Solve?

Apache Iceberg addresses several common challenges in data lake management, including the “small files problem” and partition evolution challenges. It tracks individual files in metadata, provides maintenance operations that compact small files, and lets the partition spec change without rewriting existing data, which enables organizations to handle complex data processing tasks with greater ease and performance.

Some of the key problems solved by Apache Iceberg include:

- Compaction of small files through table maintenance operations

- Flexible partition evolution

- Improved performance for large-scale data processing
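Small files do not disappear on their own; they are typically merged by a compaction job, for example the `rewrite_data_files` maintenance procedure available in Spark. The planning step behind such a job can be pictured as a bin-packing pass. The snippet below is a toy illustration in plain Python rather than Iceberg code: the function name `plan_compaction`, the file sizes, and the 128 MB target are all hypothetical.

```python
def plan_compaction(file_sizes_mb, target_mb=128):
    """Group files smaller than target_mb into bins of at most target_mb."""
    groups, current, current_size = [], [], 0
    for size in sorted(s for s in file_sizes_mb if s < target_mb):
        # Close the current bin when the next file would overflow it.
        if current and current_size + size > target_mb:
            groups.append(current)
            current, current_size = [], 0
        current.append(size)
        current_size += size
    if current:
        groups.append(current)
    # A group holding a single file gains nothing from being rewritten.
    return [g for g in groups if len(g) > 1]


# Hypothetical file sizes in MB; the 200 MB file is already large enough.
groups = plan_compaction([5, 10, 200, 60, 60, 8, 120])
print(groups)
```

Each returned group would become one rewritten file. A real compaction job also has to respect partition boundaries and commit the rewrite as a new snapshot, which this sketch ignores.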

Apache Iceberg Architecture Questions

To succeed in data engineering interviews, you need to grasp Apache Iceberg’s architecture. It’s a complex system designed to manage large-scale data lakes efficiently. Understanding its components and how they work together is crucial for answering architectural questions.

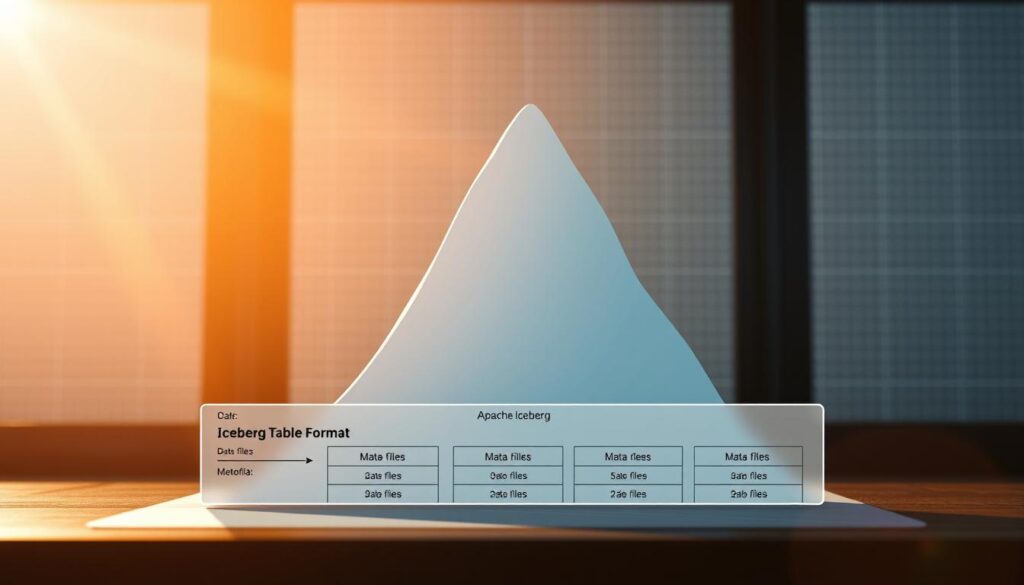

Describe the Table Format in Apache Iceberg

Apache Iceberg’s table format is designed to be flexible and scalable. A table consists of data files plus a tree of metadata: a table metadata file records the schema, partition specs, and snapshots; each snapshot points to a manifest list; and each manifest file lists data files along with their partition values and column statistics. This structure enables features like schema evolution and snapshot isolation. As noted by the Apache Iceberg documentation, “Iceberg’s table format is designed to be highly performant and adaptable to changing data lake requirements.”
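The relationship between metadata, manifests, and data files can be pictured with a small toy model. This is a plain-Python sketch, not the Iceberg API: the class names (`DataFile`, `Manifest`, `Snapshot`, `TableMetadata`) and the file paths are hypothetical and only mirror the layering, with the manifest list simplified to a tuple of manifests.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class DataFile:
    path: str
    record_count: int


@dataclass(frozen=True)
class Manifest:
    data_files: Tuple[DataFile, ...]  # files tracked by this manifest


@dataclass(frozen=True)
class Snapshot:
    snapshot_id: int
    manifests: Tuple[Manifest, ...]  # stands in for the manifest list


@dataclass
class TableMetadata:
    snapshots: List[Snapshot] = field(default_factory=list)
    current_snapshot_id: Optional[int] = None

    def current_files(self):
        """Walk snapshot -> manifests -> data files for the current snapshot."""
        snap = next(s for s in self.snapshots
                    if s.snapshot_id == self.current_snapshot_id)
        return [f for m in snap.manifests for f in m.data_files]


m1 = Manifest((DataFile("data/a.parquet", 100),))
m2 = Manifest((DataFile("data/b.parquet", 50),))
meta = TableMetadata()
meta.snapshots.append(Snapshot(1, (m1,)))
meta.snapshots.append(Snapshot(2, (m1, m2)))  # the append reuses manifest m1
meta.current_snapshot_id = 2
```

Note that the second snapshot reuses `m1` unchanged, which is the reason a new snapshot does not have to copy existing data.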

How Does Apache Iceberg Handle Metadata?

Metadata management is a critical aspect of Apache Iceberg. It uses a table metadata file, manifest lists, and manifest files to track the schema, partition specs, snapshots, and every data file in the table. Because each commit writes new metadata rather than modifying old metadata in place, this approach supports features like time travel and snapshot isolation. “Apache Iceberg’s metadata handling is a key factor in its ability to support complex data lake operations,” as it enables the tracking of changes at the level of individual files.

Explain Iceberg's File Layout and Organization

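Iceberg’s file layout is designed to optimize query performance. A table location typically holds a metadata directory and a data directory, but readers never depend on the directory structure: manifests record each data file’s path, partition values, and column-level statistics such as value bounds. Query planning uses this information to skip manifests and data files that cannot match a filter, improving overall performance. Because data files are immutable and every commit publishes a new metadata file, readers always see a consistent snapshot while writers are active, making the layout suitable for high-traffic data environments.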
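The pruning step can be sketched in a few lines. The example below is a simplified model in plain Python, assuming each file carries a lower and upper bound for a single integer column; `FileStats`, `prune`, and the paths are hypothetical names, not part of any Iceberg library.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FileStats:
    path: str
    lower: int  # smallest value of the column in this file
    upper: int  # largest value of the column in this file


def prune(files, lo, hi):
    """Keep only files whose [lower, upper] range overlaps the query range [lo, hi]."""
    return [f.path for f in files if f.upper >= lo and f.lower <= hi]


files = [
    FileStats("data/f1.parquet", 0, 99),
    FileStats("data/f2.parquet", 100, 199),
    FileStats("data/f3.parquet", 200, 299),
]

# A filter such as "value BETWEEN 150 AND 220" never needs to open f1.
selected = prune(files, 150, 220)
print(selected)
```

The decision is made from metadata alone, so skipped files are never read from storage.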

In conclusion, understanding Apache Iceberg’s architecture is essential for data engineering professionals. Its table format, metadata handling, and file layout are all designed to support efficient and scalable data management. By mastering these concepts, you can better navigate technical interviews and demonstrate your expertise in managing complex data lakes.

Data Management in Apache Iceberg

Apache Iceberg’s data management capabilities are crucial for handling large-scale data lakes efficiently. You need to understand how it manages data files, utilizes snapshots, and enables time travel to effectively leverage its features.

How Does Apache Iceberg Handle Data Files?

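Apache Iceberg treats data files as immutable and tracks them in manifests. Each commit records which files were added and which were removed or replaced, and produces a new snapshot, so a reader sees either the state before the commit or the state after it, never a partial result. Because this bookkeeping lives in the metadata layer, Iceberg can manage the lifecycle of data files, including expiring old snapshots and deleting files that are no longer referenced, without compromising performance.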

Explain Snapshots in Apache Iceberg

Snapshots in Apache Iceberg represent a complete point-in-time view of a table. Each snapshot captures the state of the data at a specific moment, allowing for efficient versioning and auditing. Iceberg’s snapshot mechanism is designed to be lightweight, avoiding data duplication while maintaining historical data integrity.

How Does Time Travel Work in Apache Iceberg?

Apache Iceberg’s time travel feature enables querying historical versions of data by referencing specific snapshots. This capability is invaluable for auditing, debugging, and analyzing data changes over time. By leveraging snapshots, Iceberg provides a powerful tool for understanding data evolution, and you can practice explaining these concepts clearly with RoboApply’s interview coach.
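Resolving a point in time to a snapshot is essentially a lookup in the table’s snapshot history. In Spark SQL this is usually expressed with `TIMESTAMP AS OF` or `VERSION AS OF`; the sketch below only models the lookup itself in plain Python. The `snapshot_log` contents and the function name `snapshot_as_of` are made up for illustration.

```python
from bisect import bisect_right

# (commit_timestamp_ms, snapshot_id), ordered by commit time; values are made up.
snapshot_log = [(1000, 11), (2000, 22), (3000, 33)]


def snapshot_as_of(log, timestamp_ms):
    """Return the id of the latest snapshot committed at or before timestamp_ms."""
    times = [ts for ts, _ in log]
    idx = bisect_right(times, timestamp_ms)
    if idx == 0:
        raise ValueError("no snapshot exists at or before the requested time")
    return log[idx - 1][1]


print(snapshot_as_of(snapshot_log, 2500))
```

Once the snapshot id is known, the query reads exactly the files that snapshot references, which is why time travel only works for snapshots that have not yet been expired.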

Schema Evolution and Partitioning Questions

Understanding how Apache Iceberg supports schema evolution and handles partitioning is vital for acing your interview. Schema evolution is a critical feature that allows you to adapt your data structures to changing requirements without significant overhead.

How Does Apache Iceberg Support Schema Evolution?

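Apache Iceberg supports schema evolution by allowing you to add, drop, rename, or reorder fields, and to widen certain types, without rewriting the entire data set. This works because every column is assigned a unique field ID that is stored in the table metadata and in the data files; readers resolve columns by ID rather than by name or position, so a rename changes only metadata and a dropped column’s ID is never reused. You can evolve your schema as needed, and Iceberg will manage the compatibility between different versions of your table structure.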
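A useful way to explain this in an interview is to show that columns are resolved by a stable numeric ID rather than by name. The following is a minimal sketch in plain Python under that assumption; the dictionaries and the helper `read_row` are hypothetical and do not reflect how any engine stores rows internally.

```python
# A row as stored in an old data file, keyed by field ID.
data_file_row = {1: 42, 2: "alice"}

# Schemas map column names to field IDs.
schema_v1 = {"id": 1, "name": 2}
schema_v2 = {"id": 1, "full_name": 2, "email": 3}  # rename field 2, add field 3


def read_row(row, schema):
    """Project a stored row through a schema; fields absent from the file read as None."""
    return {name: row.get(field_id) for name, field_id in schema.items()}


print(read_row(data_file_row, schema_v1))
print(read_row(data_file_row, schema_v2))
```

The old file is never touched: the renamed column still resolves through ID 2, and the newly added column simply reads as missing for rows written before it existed.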

To prepare for interview questions on this topic, you should be ready to explain how Apache Iceberg handles schema changes without rewriting data files, a key advantage over traditional data lake approaches. You can practice explaining these concepts with relevant examples using RoboApply’s interview coach.

Explain Partitioning Strategies in Apache Iceberg

Apache Iceberg offers flexible partitioning strategies that allow you to organize your data effectively. It supports various partition transforms, including identity, bucket, truncate, year, month, day, and hour. Understanding how to choose the right partitioning strategy for your use case is crucial.
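To make the transforms concrete, here is a small sketch of how the time-based and `truncate` transforms map a value to a partition value, following the definitions in the Iceberg table spec (time transforms count units since the Unix epoch). The function names are chosen for this example, and the `bucket` transform is left out because it depends on a 32-bit Murmur3 hash that is not in the Python standard library.

```python
from datetime import datetime, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def year(ts):
    return ts.year - 1970


def month(ts):
    return (ts.year - 1970) * 12 + (ts.month - 1)


def day(ts):
    return (ts - EPOCH).days


def hour(ts):
    return int((ts - EPOCH).total_seconds() // 3600)


def truncate_int(width, value):
    # Python's % always returns a non-negative result for positive width,
    # so negative values round down toward negative infinity.
    return value - (value % width)


def truncate_str(width, value):
    return value[:width]


ts = datetime(2025, 3, 15, 10, 30, tzinfo=timezone.utc)
print(year(ts), month(ts), day(ts), hour(ts))
print(truncate_int(10, 37), truncate_int(10, -1), truncate_str(3, "iceberg"))
```

Coarser transforms produce fewer, larger partitions, so the choice usually follows the granularity of the most common query filters.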

You should be prepared to discuss how Apache Iceberg’s partitioning approach differs from Hive-style partitioning and the benefits this provides. Additionally, understanding how session-level configurations affect schema evolution operations demonstrates your detailed knowledge of Apache Iceberg’s implementation.

How to Handle Schema Changes in Production?

Handling schema changes in production environments requires careful planning and execution. You should be prepared to discuss how to manage schema compatibility between different versions of a table’s structure and how to handle partition evolution in Apache Iceberg. This includes understanding how to adapt your data structures to changing requirements without disrupting your production workflows.

Apache Iceberg Integration Questions

To succeed in your Apache Iceberg interview, you need to demonstrate knowledge of its integration capabilities. Apache Iceberg is designed to work seamlessly with various data processing engines, making it a versatile tool for managing complex data environments.

How Does Apache Iceberg Integrate with Spark?

Apache Iceberg integrates with Apache Spark through its Spark module, which allows you to read and write Iceberg tables using Spark SQL and the DataFrame API. This integration enables you to leverage Spark’s processing power while managing your data with Iceberg. For instance, you can use Spark to perform complex queries on Iceberg tables, and Iceberg will handle the metadata management efficiently. To learn more about effective data integration strategies, visit RoboApply’s guide.

Explain Catalog Support in Apache Iceberg

Apache Iceberg supports multiple catalog implementations, including Hive, JDBC, and REST catalogs. This flexibility allows you to choose the catalog that best fits your organization’s needs. For example, you can use the Hive catalog for metadata management in a Hadoop ecosystem or a JDBC catalog to store metadata in a relational database. Iceberg’s catalog support enables you to manage your table metadata efficiently and discover tables across different environments.

- Catalogs provide a way to discover and manage Iceberg tables.

- Different catalog implementations offer flexibility in metadata storage.

- Iceberg’s catalog support is crucial for managing complex data environments.

How to Query Apache Iceberg Tables?

Querying Apache Iceberg tables can be done using SQL syntax, leveraging Iceberg-specific features. You can use Spark SQL or other query engines that support Iceberg to execute queries on Iceberg tables. Iceberg’s support for SQL queries makes it easy to work with data stored in Iceberg tables, and its integration with Spark enables you to perform complex data analysis tasks.

By understanding how Apache Iceberg integrates with Spark, its catalog support, and how to query Iceberg tables, you can demonstrate your ability to work with this powerful data management tool. Practice explaining these concepts with specific code examples using RoboApply’s interview coach to boost your confidence in your Apache Iceberg interview.

Advanced Apache Iceberg Interview Questions

When interviewing for a position that involves working with Apache Iceberg, you can expect to be asked advanced questions that test your knowledge of its features and capabilities. These questions are designed to assess your understanding of complex concepts and how they can be applied in real-world scenarios.

Explain Hidden Partitioning in Apache Iceberg

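Apache Iceberg’s hidden partitioning feature allows for efficient data organization without requiring explicit partition management from users. Partition values are derived from regular column values through transforms declared in the table’s partition spec, for example the day of a timestamp column, so writers do not have to populate a separate partition column. Queries filter on the source column, and Iceberg translates that filter into partition pruning on its own. You should be prepared to discuss how hidden partitioning improves query performance and simplifies data management.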

- Hidden partitioning eliminates the need for manual partition management.

- It improves query performance by optimizing data retrieval.

- Partition values are derived from column values through transforms in the partition spec.
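The idea behind hidden partitioning can be sketched as a translation step: the user filters on a timestamp, and the planner converts that filter into a range of partition values. The example below is a toy model in plain Python that assumes a table partitioned by the day of a timestamp column; the `partitions` mapping, the paths, and `files_for_range` are hypothetical.

```python
from datetime import datetime, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def day_transform(ts):
    """Days since the Unix epoch, the partition value for a day transform."""
    return (ts - EPOCH).days


# partition value (day ordinal) -> data files; contents are made up.
partitions = {
    20160: ["data/p20160/a.parquet"],
    20161: ["data/p20161/b.parquet"],
    20162: ["data/p20162/c.parquet"],
}


def files_for_range(start_ts, end_ts):
    """Translate a filter on the source column into a filter on partition values."""
    lo, hi = day_transform(start_ts), day_transform(end_ts)
    return [f for d, files in sorted(partitions.items())
            if lo <= d <= hi for f in files]


# The caller only mentions timestamps, never a partition column.
hits = files_for_range(
    datetime(2025, 3, 14, 6, 0, tzinfo=timezone.utc),
    datetime(2025, 3, 15, 23, 0, tzinfo=timezone.utc),
)
print(hits)
```

With Hive-style partitioning the user would have to add a matching predicate on the derived partition column by hand, and forgetting it would trigger a full scan.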

How Does Apache Iceberg Handle Concurrent Writes?

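Apache Iceberg uses an optimistic concurrency control mechanism to handle concurrent writes. Each writer prepares its changes against the table version it read, then attempts an atomic swap of the table’s current metadata pointer in the catalog. If another writer committed first, the swap fails, and the writer rebases its changes on the new table state and retries, or aborts if the changes genuinely conflict. You should be prepared to explain how this mechanism prevents the “lost update” problem common in other data lake formats.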
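The commit protocol can be illustrated with a compare-and-swap loop. This is a simplified model in plain Python, assuming the catalog offers an atomic "swap if unchanged" operation; the `Catalog` class, its methods, and the row counter are hypothetical stand-ins for a real catalog and real table metadata.

```python
import threading


class Catalog:
    """Holds the pointer to a table's current state (version and row count)."""

    def __init__(self):
        self._lock = threading.Lock()
        self._version = 0
        self._rows = 0

    def load(self):
        with self._lock:
            return self._version, self._rows

    def compare_and_swap(self, expected_version, new_rows):
        with self._lock:
            if self._version != expected_version:
                return False  # another writer committed first
            self._version += 1
            self._rows = new_rows
            return True


def append(catalog, n_rows, max_retries=100):
    """Optimistic append: read the base state, try to commit, retry on conflict."""
    for _ in range(max_retries):
        base_version, base_rows = catalog.load()
        if catalog.compare_and_swap(base_version, base_rows + n_rows):
            return
    raise RuntimeError("commit failed after retries")


catalog = Catalog()
threads = [threading.Thread(target=append, args=(catalog, 10)) for _ in range(8)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(catalog.load())
```

No writer holds a lock while preparing its changes; the only serialized step is the pointer swap, and a writer that loses the race recomputes its result from the latest state, so no update is silently overwritten.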

For more information on how Apache Iceberg handles performance metrics, you can visit RoboApply’s guide on key performance indicators for systems.

Describe Performance Optimization Techniques in Apache Iceberg

Apache Iceberg employs several performance optimization techniques, including data pruning, metadata filtering, and partition evolution. These techniques enable efficient data retrieval and reduce the overhead of query operations. You should be prepared to discuss how these techniques are used to optimize Spark queries through predicate pushdown and partition pruning.

Some key performance optimization techniques in Apache Iceberg include:

- Data pruning to reduce the amount of data scanned.

- Metadata filtering to optimize query planning.

- Partition evolution to adapt to changing data distributions.

Mastering Your Apache Iceberg Interview with RoboApply

With RoboApply, you can master Apache Iceberg concepts and confidently tackle technical interviews. The platform offers a range of tools to help you prepare, including an interview coach feature that provides specialized practice sessions for Apache Iceberg technical interviews.

You can create customized practice sessions that focus on specific Apache Iceberg use cases relevant to your target role. RoboApply’s AI-powered feedback helps refine your explanations of complex concepts, ensuring clear articulation during interviews.

- Practice with realistic mock interview scenarios that simulate technical interviewers’ questions.

- Track your progress as you master different aspects of Apache Iceberg.

- Use the session recording feature to review your explanations and identify areas for improvement.

By leveraging RoboApply’s comprehensive tools, you’ll be well-prepared to tackle Apache Iceberg interviews at any level, from entry-level data engineering roles to senior positions requiring deep expertise.

FAQ

What is the primary function of the catalog in Apache Iceberg?

The catalog in Apache Iceberg maps table names to the location of each table’s current metadata file and updates that pointer atomically on commit. The schema, partition specs, and snapshot history themselves are stored in the metadata files the pointer refers to, which allows for efficient management and querying of the data.

How does Apache Iceberg support schema evolution?

Apache Iceberg supports schema evolution by allowing changes to the schema without rewriting the existing data files, thus enabling the addition, removal, renaming, and reordering of columns, along with a limited set of safe type promotions (such as int to long or float to double), while maintaining data consistency.

What are the benefits of using snapshots in Apache Iceberg?

Snapshots in Apache Iceberg provide a way to maintain a versioned history of the data, enabling features like time travel, which allows users to query the data as it was at a specific point in the past, and ensuring data consistency across different queries.

How does partitioning work in Apache Iceberg?

Partitioning in Apache Iceberg is a strategy used to organize data into more manageable pieces based on certain criteria, such as date or region, which can significantly improve query performance by reducing the amount of data that needs to be scanned.

Can Apache Iceberg handle concurrent writes?

Yes, Apache Iceberg is designed to handle concurrent writes through its use of optimistic concurrency control: multiple writers can work on the same table simultaneously, each commit is an atomic swap of the table’s metadata pointer, and a writer that loses the race retries against the new table state, ensuring data integrity and consistency.

How does Apache Iceberg integrate with Spark?

Apache Iceberg integrates with Spark by providing a Spark data source that allows users to read and write Iceberg tables using Spark SQL and DataFrame APIs, enabling seamless interaction between Spark and Iceberg.

What is hidden partitioning in Apache Iceberg?

Hidden partitioning in Apache Iceberg is a feature that derives partition values from regular columns through transforms, so users filter on the original columns and do not need to know or reference the partition layout in their queries, making it easier to work with partitioned data while maintaining query performance.

How does Apache Iceberg optimize query performance?

Apache Iceberg optimizes query performance through various techniques, including data clustering, partition pruning, and the use of metadata to reduce the amount of data that needs to be scanned, resulting in faster query execution times.